Mastering Real-Time Game Analytics with Kafka and Node.js

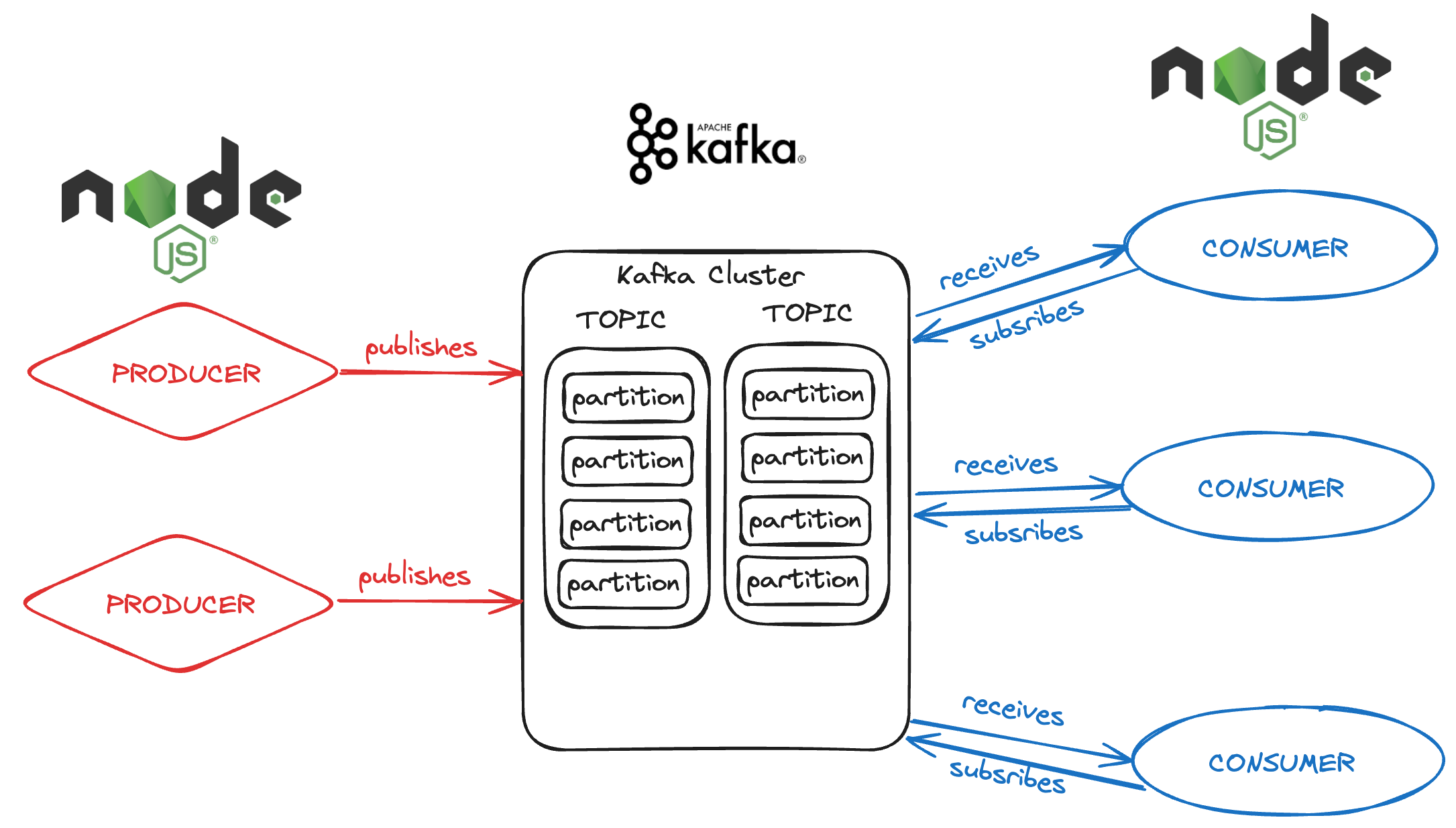

In the vast ocean of modern web development, data streams are the tides that shape our digital experiences. Apache Kafka stands as the lighthouse, guiding the flow of data, while Node.js sails the seas with its event-driven winds, and Docker ensures the journey is consistent and isolated. In this article, we will navigate through setting up a Kafka data streaming platform with Node.js, all encapsulated in the reliable containerization provided by Docker.

The Pillars of Our Data Odyssey

Before we set sail, let's acquaint ourselves with the crew of our voyage:

- Apache Kafka: A distributed streaming platform capable of handling trillions of events a day.
- Node.js: A JavaScript runtime built on Chrome's V8 engine, known for its event-driven, non-blocking I/O model.
- Docker: A platform for developing, shipping, and running applications inside lightweight, portable containers.

Docking Kafka with Docker

Running Kafka in Docker simplifies dependency management and ensures that our Kafka environment is consistent across all development and production systems.

First, we'll need a docker-compose.yml file to define our ZooKeeper and Kafka services:

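    version: '3.7'

    services:
      zookeeper:
        # Pinned to a 7.x release: this file runs Kafka in ZooKeeper mode,
        # which Confluent Platform 8.x (the current "latest") no longer supports
        image: confluentinc/cp-zookeeper:7.4.0
        environment:
          ZOOKEEPER_CLIENT_PORT: 2181
          ZOOKEEPER_TICK_TIME: 2000
        ports:
          - 2181:2181

      kafka:
        image: confluentinc/cp-kafka:7.4.0
        depends_on:
          - zookeeper
        ports:
          - 9092:9092
          - 29092:29092
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
          # kafka:9092 is advertised to other containers, localhost:29092 to clients on the host
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka:9092,PLAINTEXT_HOST://localhost:29092
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
          KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
          # Single broker, so the internal offsets topic cannot be replicated
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1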

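Run docker-compose up to lift the anchors on your Kafka broker and on ZooKeeper, which coordinates the broker. Clients running on the host machine reach the broker at localhost:29092, the address advertised by the PLAINTEXT_HOST listener; kafka:9092 only resolves for other containers on the same Compose network.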
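Which address a client should use depends on where it runs: the compose file advertises kafka:9092 to containers and localhost:29092 to processes on the host. A minimal sketch of choosing the broker list from the environment follows. It assumes a hypothetical KAFKA_BROKERS variable (not part of the original setup, and not something Kafka or kafkajs defines) holding a comma-separated list of addresses.

```javascript
// Sketch: pick the broker list based on where the client runs.
// KAFKA_BROKERS is a hypothetical variable name chosen for this example.
const DEFAULT_HOST_BROKERS = ['localhost:29092']; // PLAINTEXT_HOST listener

function resolveBrokers(env = process.env) {
  const raw = env.KAFKA_BROKERS;
  if (!raw) {
    return [...DEFAULT_HOST_BROKERS];
  }
  // Split "a:1, b:2" into ['a:1', 'b:2'], dropping empty entries
  const brokers = raw
    .split(',')
    .map((address) => address.trim())
    .filter((address) => address.length > 0);
  return brokers.length > 0 ? brokers : [...DEFAULT_HOST_BROKERS];
}

console.log(resolveBrokers({}));
console.log(resolveBrokers({ KAFKA_BROKERS: 'kafka:9092' }));
```

The result could be passed as the brokers option when constructing the client. A service running in a container on the same Compose network would then set KAFKA_BROKERS=kafka:9092, while a script on the host needs no configuration.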

Node.js as Our First Mate

With Kafka running, let's turn our attention to Node.js, which will be the workhorse of our data streaming application.

Building the Producer

Our producer's job is to publish messages to Kafka. Below is a Node.js script that does just that, using the kafkajs client library (installed with npm install kafkajs):

    // producer.js
    const { Kafka } = require('kafkajs');

    async function startProducer() {
      const kafka = new Kafka({
        clientId: 'my-producer',
        // Host-facing listener from docker-compose.yml (PLAINTEXT_HOST)
        brokers: ['localhost:29092'],
      });

      const producer = kafka.producer();
      await producer.connect();

      // Publish one message every 1000 ms
      setInterval(async () => {
        try {
          await producer.send({
            topic: 'user-signups',
            messages: [{ value: 'New user signed up!' }],
          });
          console.log('Message published');
        } catch (error) {
          console.error('Error publishing message', error);
        }
      }, 1000);
    }

    // Report startup failures instead of leaving an unhandled rejection
    startProducer().catch((error) => {
      console.error('Producer failed to start', error);
      process.exit(1);
    });
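The producer above sends the same fixed string every second. Real events usually carry structured data, and one common approach is to serialize a JSON object as the value and set a key so that events for the same user go to the same partition. A minimal sketch, assuming hypothetical userId and plan fields that are not part of the original example:

```javascript
// Sketch: build a { key, value } message object for a signup event.
// The event fields (type, userId, plan, signedUpAt) are illustrative assumptions.
function buildSignupMessage(userId, plan, now = new Date()) {
  if (typeof userId !== 'string' || userId.length === 0) {
    throw new TypeError('userId must be a non-empty string');
  }
  const event = {
    type: 'user-signup',
    userId,
    plan,
    signedUpAt: now.toISOString(),
  };
  return {
    // Messages with the same key are routed to the same partition
    // by the default partitioner while the partition count is unchanged
    key: userId,
    value: JSON.stringify(event),
  };
}

const message = buildSignupMessage('user-42', 'free', new Date(0));
console.log(message);
```

The returned object could take the place of the fixed message in the messages array passed to producer.send, for example messages: [buildSignupMessage('user-42', 'free')].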

Building the Consumer

Our consumer listens for messages from Kafka and does something with them; in this case, it logs them to the console:

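    // consumer.js
    const { Kafka } = require('kafkajs');

    async function startConsumer() {
      const kafka = new Kafka({
        clientId: 'my-consumer',
        // Host-facing listener from docker-compose.yml (PLAINTEXT_HOST)
        brokers: ['localhost:29092'],
      });

      // groupId is a consumer option, not a client option
      const consumer = kafka.consumer({ groupId: 'user-signups-group' });
      await consumer.connect();
      await consumer.subscribe({ topic: 'user-signups', fromBeginning: true });

      await consumer.run({
        eachMessage: async ({ message }) => {
          // message.value is a Buffer, so decode it before logging
          console.log(`Received message: ${message.value.toString()}`);
        },
      });
    }

    // Report startup failures instead of leaving an unhandled rejection
    startConsumer().catch((error) => {
      console.error('Consumer failed to start', error);
      process.exit(1);
    });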
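Message values arrive as raw bytes (a Buffer in kafkajs), and a consumer usually cannot assume that every payload is well formed. A minimal sketch of a decoding helper follows, assuming the producer sends JSON. The parseEvent name and the shape of its return value are hypothetical, chosen for this example.

```javascript
// Sketch: decode a Kafka message value into an object without throwing.
// Returns { ok: true, event } on success or { ok: false, error, raw } on failure.
function parseEvent(message) {
  // value can be null, for example on a tombstone record
  if (message.value == null) {
    return { ok: false, error: 'empty value', raw: null };
  }
  const raw = message.value.toString('utf8');
  try {
    return { ok: true, event: JSON.parse(raw) };
  } catch (error) {
    return { ok: false, error: error.message, raw };
  }
}

console.log(parseEvent({ value: Buffer.from('{"userId":"user-42"}') }));
console.log(parseEvent({ value: Buffer.from('New user signed up!') }));
```

Inside eachMessage, the handler could call parseEvent(message) and log or skip entries where ok is false, rather than letting one malformed payload throw inside the handler.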

Conclusion: Navigating the Stream

With Kafka handling the data streams, Node.js processing the data, and Docker ensuring that our environment is consistent, we have a robust system for real-time data processing.

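Whether you're tracking user activities, processing IoT data, or handling financial transactions, this setup gives you a starting point for a resilient and scalable architecture; the single-broker configuration shown here suits development, and production deployments typically add more brokers and replication. So, hoist the sails with Kafka, Node.js, and Docker, and set forth into the horizon where real-time data processing awaits.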

Remember, with great power comes great responsibility—use these tools wisely to ensure that your data streams are not only powerful but also secure, reliable, and efficient. Happy streaming!